Here’s a simple question: Is moderate drinking okay?

Like millions of Americans, I look forward to a glass of wine—sure, occasionally two—while cooking or eating dinner. I strongly believe that an ice-cold pilsner on a hot summer day is, to paraphrase Benjamin Franklin, suggestive evidence that a divine spirit exists and gets a kick out of seeing us buzzed.

But, like most people, I understand that booze isn’t medicine. I don’t consider a bottle of California cabernet to be the equivalent of a liquid statin. Drinking to excess is dangerous for our bodies and those around us. Having more than three or four drinks a night is strongly related to a host of diseases, including liver cirrhosis, and alcohol addiction is a scourge for those genetically predisposed to dependency.

If the evidence against heavy drinking is clear, the research on my wine-with-dinner habit is a wasteland of confusion and contradiction. This month, the U.S. surgeon general published a new recommendation that all alcohol come with a warning label indicating it increases the risk of cancer. Around the same time, a meta-analysis published by the National Academies of Sciences, Engineering, and Medicine concluded that moderate drinking is associated with a longer life. Many scientists scoffed at both of these headlines, claiming that the underlying studies are so flawed that to derive strong conclusions from them would be like trying to make a fine wine out of a bunch of supermarket grapes.

I’ve spent the past few weeks poring over studies, meta-analyses, and commentaries. I’ve crashed my web browser with an oversupply of research-paper tabs. I’ve spoken with researchers and then consulted with other scientists who disagreed with those researchers. And I’ve reached two conclusions. First, my seemingly simple question about moderate drinking may not have a simple answer. Second, I’m not making any plans to give up my nightly glass of wine.

Alcohol ambivalence has been with us for almost as long as alcohol. The notion that booze is enjoyable in small doses and hellish in excess was captured well by Eubulus, a Greek comic poet of the fourth century B.C.E., who wrote that although two bowls of wine brought “love and pleasure,” five led to “shouting,” nine led to “bile,” and 10 produced outright “madness, in that it makes people throw things.”

In the late 20th century, however, conventional wisdom lurched strongly toward the idea that moderate drinking was healthy, especially when the beverage of choice was red wine. In 1991, Morley Safer, a correspondent for CBS, recorded a segment of 60 Minutes titled “The French Paradox,” in which he pointed out that the French filled their stomachs with meat, oil, butter, and other sources of fat, yet managed to live long lives with lower rates of cardiovascular disease than their Northern European peers. “The answer to the riddle, the explanation of the paradox, may lie in this inviting glass” of red wine, Safer told viewers. Following the report, demand for red wine in the U.S. surged.

The notion that a glass of red wine every night is akin to medicine wasn’t just embraced by a gullible news media. It was assumed as a matter of scientific fact by many researchers. “The evidence amassed is sufficient to bracket skeptics of alcohol’s protective effects with the doubters of manned lunar landings and members of the flat-Earth society,” the behavioral psychologist and health researcher Tim Stockwell wrote in 2000.

Today, however, Stockwell is himself a flat-earther, so to speak. In the past 25 years, he has spent, he told me, “thousands and thousands of hours” reevaluating studies on alcohol and health. And now he’s convinced, as many other scientists are, that the supposed health benefits of moderate drinking were based on bad research and confounded variables.

A technical term for the so-called French paradox is the “J curve.” When you plot the number of drinks people consume along an X axis and their risk of dying along the Y axis, most observational studies show a shallow dip at about one drink a day for women and two drinks a day for men, suggesting protection against all-cause mortality. Then the line rises—and rises and rises—confirming the idea that excessive drinking is plainly unhealthy. The resulting graph looks like a J, hence the name.

The J-curve thesis suffers from many problems, Stockwell told me. It relies on faulty comparisons between moderate drinkers and nondrinkers. Moderate drinkers tend to be richer, healthier, and more social, while nondrinkers are a motley group that includes people who have never had alcohol (who tend to be poorer), people who quit drinking alcohol because they’re sick, and even recovering alcoholics. In short, many moderate drinkers are healthy for reasons that have nothing to do with drinking, and many nondrinkers are less healthy for reasons that have nothing to do with alcohol abstention.

When Stockwell and his fellow researchers threw out the observational studies that were beyond salvation and adjusted the rest to account for some of the confounders I listed above, “the J curve disappeared,” he told me. By some interpretations, even a small amount of alcohol—as little as three drinks a week—seemed to increase the risk of cancer and death.
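
To make the confounding argument concrete, here is a toy calculation. Every number in it is invented for illustration and comes from no study: a hypothetical population is split into “healthy” and “sick” strata, and within each stratum drinkers and nondrinkers die at exactly the same rate, so alcohol has no effect by construction. Because the sick are assumed to drink less, the naive comparison still makes drinking look protective. Direct standardization, one simple adjustment technique standing in for the more elaborate models real reviews use, weights each stratum by its share of the whole population, and the apparent benefit disappears.

```python
from fractions import Fraction

# HYPOTHETICAL population, invented for illustration. Within each stratum,
# drinkers and nondrinkers share the same death rate: alcohol has no true effect.
STRATA = {
    "healthy": {"n": 1000, "share_drinkers": Fraction(8, 10), "death_rate": Fraction(5, 100)},
    "sick": {"n": 1000, "share_drinkers": Fraction(3, 10), "death_rate": Fraction(20, 100)},
}


def group_counts(drinks):
    """Yield (stratum, people, deaths) for drinkers (True) or nondrinkers (False)."""
    for name, s in STRATA.items():
        share = s["share_drinkers"] if drinks else 1 - s["share_drinkers"]
        people = s["n"] * share
        yield name, people, people * s["death_rate"]


def crude_rate(drinks):
    """Death rate that ignores health status: the naive comparison."""
    rows = list(group_counts(drinks))
    return sum(d for _, _, d in rows) / sum(p for _, p, _ in rows)


def adjusted_rate(drinks):
    """Directly standardized rate: each stratum weighted by its share of everyone."""
    total = sum(s["n"] for s in STRATA.values())
    return sum(
        Fraction(STRATA[name]["n"], total) * (deaths / people)
        for name, people, deaths in group_counts(drinks)
    )


crude_ratio = crude_rate(True) / crude_rate(False)
adjusted_ratio = adjusted_rate(True) / adjusted_rate(False)
print(f"crude risk ratio:    {float(crude_ratio):.2f}")     # drinking looks protective
print(f"adjusted risk ratio: {float(adjusted_ratio):.2f}")  # no effect once health is held fixed
```

Running it prints a crude risk ratio of about 0.55 and an adjusted ratio of 1.00. Real adjustment is much harder than this, because actual confounders are numerous, imperfectly measured, and sometimes unknown.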

The demise of the J curve is profoundly affecting public-health guidance. In 2011, Canada’s public-health agencies said that men could safely enjoy up to three oversize drinks a night with two abstinent days a week—about 15 drinks a week. In 2023, the Canadian Centre on Substance Use and Addiction revised its guidelines to define low-risk drinking as no more than two drinks a week.

Here’s my concern: The end of the J curve has made way for a new conventional wisdom that is also built on flawed studies and potentially overconfident conclusions: the idea that moderate drinking is seriously risky. The pendulum is swinging from flawed “red wine is basically heart medicine!” TV segments to questionable warnings about the risk of moderate drinking and cancer. After all, we’re still dealing with observational studies that struggle to account for the differences between diverse groups.

In a widely read breakdown of alcohol-health research, the scientist and author Vinay Prasad wrote that the observational research on which scientists are still basing their conclusions suffers from a litany of “old data, shitty data, confounded data, weak definitions, measurement error, multiplicity, time-zero problems, and illogical results.” As he memorably summarized the problem: “A meta-analysis is like a juicer, it only tastes as good as what you put in.” Even folks like Stockwell who are trying to turn the flawed data into useful reviews are like well-meaning chefs, toiling in the kitchen, doing their best to make coq au vin out of a lot of chicken droppings.

The U.S. surgeon general’s new report on alcohol recommended adding a more “prominent” warning label on all alcoholic beverages about cancer risks. The top-line findings were startling. Alcohol contributes to about 100,000 cancer cases and 20,000 cancer deaths each year, the surgeon general said. The guiding motivation sounded honorable. About three-fourths of adults drink once or more a week, and fewer than half of them are aware of the relationship between alcohol and cancer risk.

But many studies linking alcohol to cancer risk are bedeviled by the confounding problems facing many observational studies. For example, a study can find a relationship between moderate alcohol consumption and breast-cancer detection, but moderate consumption is correlated with income, as is access to mammograms, so the drinkers in such a study may simply be getting screened more often.

One of the best-established mechanisms linking alcohol to cancer is that the body breaks alcohol down into acetaldehyde, a compound that binds to and damages DNA, increasing the risk that a new cell grows out of control and becomes a cancerous tumor. This mechanism has been demonstrated in animal studies. But, as Prasad points out, we don’t approve drugs based on animal studies alone; many drugs work in mice and fail in clinical trials in humans. Just because we observe a biological mechanism in mice doesn’t mean you should live your life based on the assumption that the same cellular dance is happening inside your body.

I’m willing to believe, even in the absence of slam-dunk evidence, that alcohol increases the risk of developing certain types of cancer for certain people. But as the surgeon general’s report itself points out, it’s important to distinguish between “absolute” and “relative” risk. Owning a swimming pool dramatically increases the relative risk that somebody in the house will drown, but the absolute risk of drowning in your backyard swimming pool is blessedly low. In a similar way, some analyses have concluded that even moderate drinking can increase a person’s odds of getting mouth cancer by about 40 percent. But given that the lifetime absolute risk of developing mouth cancer is less than 1 percent, this means one drink a day increases the typical individual’s chance of developing mouth cancer by about 0.3 percentage points. The surgeon general reports that moderate drinking (say, one drink a night) increases the relative risk of breast cancer by 10 percent, but that merely raises the absolute lifetime risk of getting breast cancer from about 11 percent to about 13 percent. Assuming that the math is sound, I think that’s a good thing to know. But if you pass this information along to a friend, I think you can forgive them for saying: Sorry, I like my chardonnay more than I like your two percentage points with a wide confidence interval.
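
The relative-to-absolute conversion for mouth cancer is a single multiplication: the absolute increase equals the baseline risk times the relative increase. The sketch below treats the 40 percent figure as a risk ratio, a reasonable approximation for a disease this rare. The 0.75 percent baseline is an assumption, chosen because it sits under the text’s “less than 1 percent” and reproduces its 0.3-point figure; the helper names are hypothetical.

```python
def absolute_increase(baseline_risk: float, relative_increase: float) -> float:
    """Rise in absolute risk (as a fraction) implied by a relative increase.

    baseline_risk: lifetime risk without drinking, e.g. 0.0075 for 0.75 percent
    relative_increase: e.g. 0.40 for "about 40 percent" higher relative risk
    """
    return baseline_risk * relative_increase


def to_points(fraction: float) -> float:
    """Convert a fraction (0.003) to percentage points (0.3)."""
    return fraction * 100


# ASSUMPTION: 0.75 percent lifetime baseline for mouth cancer.
mouth = to_points(absolute_increase(0.0075, 0.40))
print(f"assumed 0.75% baseline: +{mouth:.1f} percentage points")

# Upper bound: even a full 1 percent baseline yields only 0.4 points.
ceiling = to_points(absolute_increase(0.01, 0.40))
print(f"1% baseline: +{ceiling:.1f} percentage points")
```

A large relative change applied to a small baseline produces a small absolute change, which is the swimming-pool point in numerical form.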

Where does this leave us? Not so far from our ancient-Greek friend Eubulus. Thousands of years and hundreds of studies after the Greek poet observed the dubious benefits of too much wine, we have much more data without much more certainty.

In her review of the literature, the economist Emily Oster concluded that “alcohol isn’t especially good for your health.” I think she’s probably right. But life isn’t (or, at least, shouldn’t be) about avoiding every activity with a whisker of risk. Cookies are not good for your health, either, as Oster points out, but only the grouchiest doctors will instruct their healthy patients to forswear Oreos. Even salubrious activities (trying to bench your body weight, getting in a car to hang out with a friend) incur the real possibility of injury.

An appreciation for uncertainty is nice, but it’s not very memorable. I wanted a takeaway about alcohol and health that I could repeat to a friend if they ever ask me to summarize this article in a sentence. So I pressed Tim Stockwell to define his most cautious conclusions in a memorable way, even if I thought he might be overconfident in his caution.

“One drink a day for men or women will reduce your life expectancy on average by about three months,” he said. Moderate drinkers should have in their mind that “every drink reduces your expected longevity by about five minutes.” (The risk compounds for heavier drinkers, he added. “If you drink at a heavier level, two or three drinks a day, that goes up to like 10, 15, 20 minutes per drink—not per drinking day, but per drink.”)
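
Stockwell’s two rules of thumb can be checked against each other. This sketch assumes that “three months” means a quarter of a 365.25-day year and that the loss accrues over a lifetime of one drink a day; both readings are assumptions for this illustration, not his stated method. Dividing the minutes lost by five minutes per drink gives the number of drinks, and so the years of daily drinking, that the two figures jointly imply.

```python
MINUTES_PER_DAY = 24 * 60
DAYS_PER_YEAR = 365.25  # assumption: average year length, leap years included

# Stockwell's rules of thumb, as quoted above.
life_lost_minutes = 0.25 * DAYS_PER_YEAR * MINUTES_PER_DAY  # "about three months"
minutes_per_drink = 5                                        # "about five minutes"

# How many five-minute losses add up to three months?
drinks_implied = life_lost_minutes / minutes_per_drink
years_of_daily_drinking = drinks_implied / DAYS_PER_YEAR

print(f"{drinks_implied:,.0f} drinks, or about {years_of_daily_drinking:.0f} years of one a day")
```

It prints 26,298 drinks, or about 72 years of one a day, roughly an adult lifetime. The two figures are therefore consistent with each other, which is a check on the arithmetic, not on the studies behind it.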

Every drink takes five minutes off your life. Maybe the thought scares you. Personally, I find great comfort in it—even as I suspect it suffers from the same flaws that plague this entire field. Several months ago, I spoke with the Stanford University scientist Euan Ashley, who studies the cellular effects of exercise. He has concluded that every minute of exercise adds five extra minutes of life.

When you put these two statistics together, you get this wonderful bit of rough longevity arithmetic: For moderate drinkers, every drink reduces your life by the same five minutes that one minute of exercise can add back. There’s a motto for healthy moderation: Have a drink? Have a jog.
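
The “have a drink? have a jog” arithmetic fits in a few lines. The hypothetical `net_minutes` helper below multiplies each activity by its quoted five-minute rate and assumes both rates stay constant and simply add, which is exactly the kind of rough simplification the text warns about. It is only meant for moderate drinkers, since heavier drinking is said to cost more per drink.

```python
# Rough ledger for a *moderate* drinker, using the estimates quoted in the text.
MINUTES_LOST_PER_DRINK = 5            # Stockwell
MINUTES_GAINED_PER_EXERCISE_MIN = 5   # Ashley


def net_minutes(drinks: int, exercise_minutes: int) -> int:
    """Estimated change in life expectancy, in minutes (positive means a gain)."""
    gained = exercise_minutes * MINUTES_GAINED_PER_EXERCISE_MIN
    lost = drinks * MINUTES_LOST_PER_DRINK
    return gained - lost


# A glass of wine every night, balanced by one minute of jogging per glass.
print(net_minutes(drinks=7, exercise_minutes=7))   # 0
# The same week of wine with a single 30-minute run instead.
print(net_minutes(drinks=7, exercise_minutes=30))  # 115
```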

Even this kind of arithmetic can miss a bigger point. To reduce our existence to a mere game of minutes gained and lost is to squeeze the life out of life. Alcohol is not like a vitamin or pill that we swiftly consume in the solitude of our bathrooms, which can be straightforwardly evaluated in controlled laboratory testing. At best, moderate alcohol consumption is enmeshed in activities that we share with other people: cooking, dinners, parties, celebrations, rituals, get-togethers—life! It is pleasure, and it is people. It is a social mortar for our age of social isolation.

[Read: The anti-social century]

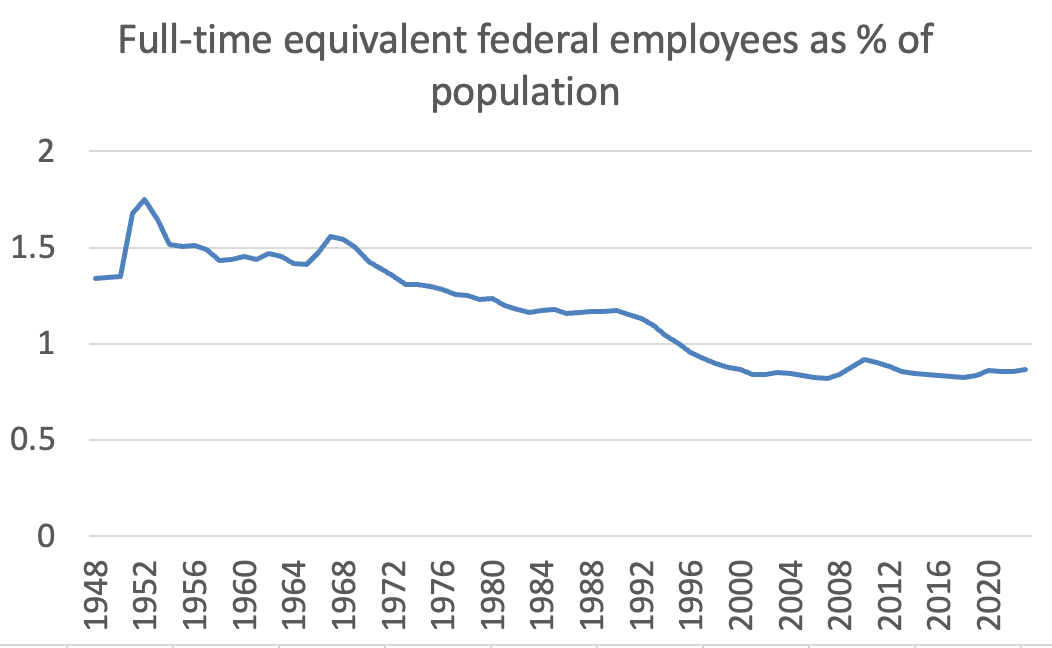

An underrated aspect of the surgeon general’s report is that it is following, rather than trailblazing, a national shift away from alcohol. As recently as 2005, Americans were more likely to say that alcohol was good for their health than bad. Last year, they were more than five times as likely to say it was bad than good. In the first seven months of 2024, alcohol sales volume declined for beer, wine, and spirits. The decline seemed especially pronounced among young people.

To the extent that alcohol carries a serious risk of excess and addiction, less booze in America seems purely positive. But for those without religious or personal objections, healthy drinking is social drinking, and the decline of alcohol seems related to the fact that Americans now spend less time in face-to-face socializing than at any point in modern history. That some Americans are trading the blurry haze of intoxication for the crystal clarity of sobriety is a blessing for their minds and guts. But in some cases, they may be trading an ancient drug of socialization for the novel intoxicants of isolation.